- CPU size can be greatly reduced which causes its price to get lower

- Processor without explicit floating point unit uses much less energy

- It can dramatically improve computing speed, so that application can spend more time in a sleep mode

However, developing a program in fixed point arithmetic might be really problematic. Main disadvantage is that mathematical operations results are prone to suffer from range overflows or underflows. Taking sufficient precautions requires the developer to perform variables static range analysis and scale them when necessary. As a result development time is increased, especially for inexperienced programmers.

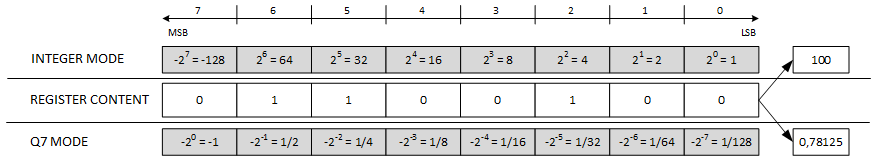

In fixed point arithmetic numbers are most commonly represented in QX.Y format, where X denotes number of bits allocated for integer part and Y for fractional part. Common practice, especially in digital signal processing applications, is to completely resign from integer part in favor of fractional part resolution increase. Anyway, storing a fixed point variable is not different from holding any other binary value. In fact, it is just a matter of how we interpret registered content. Below is an example for one byte width register in Q7 format (one sign bit, 7 fractional bits).

Inplementation of basic mathematical operations is also quite easy to understand. Addition and subtraction require only that both terms have the same Q format, and then a result can be evaluated in program exactly as for any other binary numbers with standard operators. Things get a little bit more complicated as far as multiplication is concerned. There is no constraint regarding factors Q format equal alignment. Multiplying QA.B by QC.D will basically give a result in Q(A+C).(B+D) which means that it requires twice more space in memory. If you want to perform a Q back conversions, you have to modify the variable by performing bitwise shift operations. For example, let’s consider two Q15 numbers multiplication in C:

int16_t a, b, res; /* Factors and result in Q15 format */ int32_t acc; /* Accumulator for storing temporary multiplication result */ acc = (int32_t)a*b; res = (int16_t)(acc >> 15);

Multiplying two Q15 numbers gives a temporary Q30 result. Two most significant bits store the same information about its sign. Back conversion is next performed by discarding 15 least significant bits by a shift operation. It should be also mentioned that some microprocessors with DSP specific instruction set can additionally shift accumulator content one bit to the left automatically to remove extra sign when proper configuration flag is set. In that case the acc variable needs to be shifted 16 bits to obtain a valid result. Once again systems which support DSP operations would do this in more efficient way by directly copying upper 16 bits from accumulator to destination address in memory. For reference in C55 family of Texas Instruments fixed point DSP processors fractional multiplication with additional saturation control can be triggered by following compiler intrinsic:

acc = _lsmpy(a, b); res = (int16_t)(acc >> 16);

Next step in developing fixed point compatible applications is to build mathematical function library which is at least capable of replacing standard library “math.h”. Fortunately, there’s no need to write functions from scratch as there are many third-party libraries available for free download. Let’s just mention C64x IQMath and DSPLIB dedicated for TI processors, CMSIS DSP for ARM Cortex CPU or cross platform libfixmath.

It is still quite possible that chosen library lacks some functionality crucial for developed application. In this situation it’s worth remembering that all standard implementations are based on numerical methods. For an in-depth look let’s focus on implementing cosine function that is compatible with Q15 data format. We begin by scaling down input argument by PI to satisfy input range constraint. Next we use approximation technique to evaluate function result by applying following polynomial:

![]()

It’s really accurate but only for x in <0, 0.5> range. We can easily solve this problem by making use of cosine symmetric properties. Instead of scaling polynomial coefficients, it’s better to encode them in Q3.12 format which offers us <-8, 8) data range. Finally, we can additionally optimize number of calculations which must be performed by rewriting equation to following one:

![]()

Polynomial evaluation is now performed in 5 “multiply and accumulate” operations and each of them would take only one cycle on microcontrollers with MAC hardware block. For more information you can check Real-time Digital Signal Processing by Sen M. Kuo, Bob H. Lee and Wenshun Tian.

Benchmark tests run on C55 show that computing a single cosine value for a given argument takes less than 30 cycles (taking into account auxiliary register preparations). It would also be valuable for you to bear in mind that emulation of floating point calculations for “math.h” cos() call would require approximately 3000 clock cycles on the same platform – definitely too much for a low-power system.